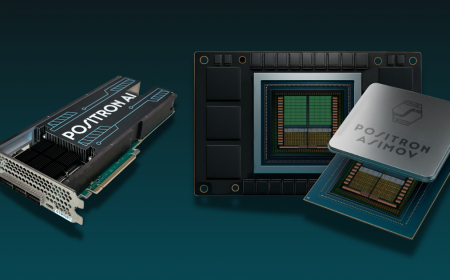

Amazon Releases an Impressive New AI Chip and Teases an Nvidia-Friendly Roadmap

AWS unveils its new Trainium3 AI chip with major performance gains and previews Trainium4, which will support Nvidia NVLink Fusion for hybrid AI training systems.

Amazon Web Services, which has been building its own AI training chips for several years, unveiled its newest model, Trainium3, boasting significant performance gains. The announcement was made on Tuesday at AWS re:Invent 2025, where the company also previewed its next AI training chip, Trainium4, which is already in development and will be compatible with Nvidia hardware.

During the event, AWS introduced the Trainium3 UltraServer, a system built around AWS's 3-nanometer Trainium3 chip and the company's proprietary networking technology. As expected, the third-generation chip and server deliver substantial improvements in AI training and inference performance over the previous generation.

According to AWS, the system is more than 4x faster and has 4x more memory than its predecessor, which speeds up AI training and helps serve high-demand AI applications. Each UltraServer holds 144 chips, and by linking thousands of UltraServers, customers can interconnect up to 1 million Trainium3 chips, a 10x increase over the prior generation, the company said.

AWS also emphasised energy efficiency, claiming the new chips and systems use 40% less energy than the previous version. At a time when global demand for massive, power-hungry data centres continues to surge, AWS says it is designing systems that use less power, not more.

This strategy not only benefits the company’s infrastructure but also aligns with Amazon’s cost-focused philosophy by helping AI cloud customers save money.

Companies such as Anthropic (in which Amazon has invested), Japanese LLM developer Karakuri, SplashMusic, and Decart have been using Trainium3 and its associated systems and have reportedly lowered their inference costs as a result.

AWS also offered a glimpse of its future hardware plans by previewing Trainium4, which is currently in development. The upcoming chip is expected to deliver another substantial performance leap and will support Nvidia’s NVLink Fusion high-speed interconnect technology.

This compatibility means Trainium4-powered systems will be able to interoperate with Nvidia GPUs and extend their performance through them, while still running on Amazon's own lower-cost server infrastructure.

It's also notable that Nvidia's CUDA ecosystem has become the dominant standard for AI development. If Trainium4 integrates more smoothly with Nvidia technologies, AWS may find it easier to attract large-scale AI applications originally built for Nvidia GPUs to its cloud.

Amazon has not announced a release timeline for Trainium4. If the company follows past rollout patterns, more details will likely be shared at next year's re:Invent conference.