Google’s Gemini 3 Processes Over 1 Trillion Tokens Per Day Amidst Competition with OpenAI

Google has launched its new Gemini 3 Flash model, which will become the default model in the Gemini app and in AI Mode in Search. The model offers significant performance improvements over its predecessor and competes with other leading AI models. It is designed to be faster and cheaper, and to handle multimodal content better.

Google unveiled its new Gemini 3 Flash model today, a faster, more affordable version of Gemini 3, which was released last month. The new model will be the default in the Gemini app and in AI Mode in Google Search, a strategic move to capture more attention in the AI space and compete with OpenAI’s offerings.

Gemini 3 Flash arrives just six months after Google launched Gemini 2.5 Flash, and it delivers significant performance improvements. Across benchmarks, it outperforms its predecessor and, in certain areas, matches other cutting-edge models, including Gemini 3 Pro and OpenAI’s GPT-5.2.

For example, Gemini 3 Flash scored 33.7% without tool use on the Humanity’s Last Exam benchmark, which tests expertise across multiple domains. In comparison, Gemini 3 Pro scored 37.5%, Gemini 2.5 Flash scored 11%, and the newly released GPT-5.2 scored 34.5%. On MMMU-Pro, a multimodality and reasoning benchmark, the new model outperformed all competitors with a score of 81.2%.

Consumer Rollout

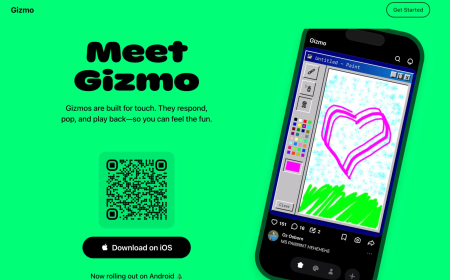

Google is making Gemini 3 Flash the default model globally in the Gemini app, replacing Gemini 2.5 Flash. Users can still select the Pro model in the model picker for tasks such as math and coding. The company claims the new Flash model is good at understanding multimodal content and answering based on inputs such as videos, sketches, and audio recordings.

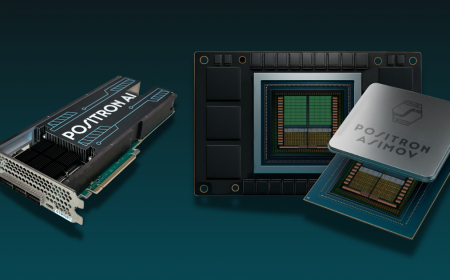

Image Credits: Google

For example, users can upload a short video of a pickleball match and ask for tips, sketch a drawing for the model to interpret, or submit an audio file for analysis or to generate a quiz. The model also better understands user intent and can generate more visual responses, including images and tables.

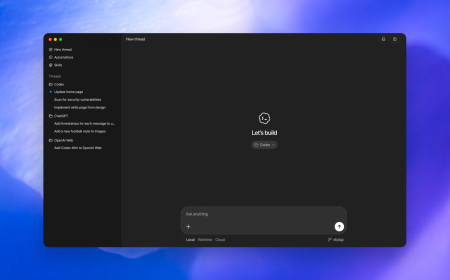

Gemini 3 Flash also lets users create app prototypes directly in the Gemini app using prompts. Separately, Gemini 3 Pro is now available to everyone in the U.S. in Search, and the Nano Banana Pro image model is now accessible to more people in the U.S. as well.

Enterprise and Developer Availability

Several companies, including JetBrains, Figma, Cursor, Harvey, and Latitude, are already using the Gemini 3 Flash model, which is available through Vertex AI and Gemini Enterprise.

For developers, the model is available in preview through the API and in Antigravity, the coding tool Google released last month. The company said the new model scored 78% on the SWE-bench Verified coding benchmark, trailing only GPT-5.2. Google says it is particularly well suited to tasks such as video analysis, data extraction, and visual Q&A, and that its speed makes it a good fit for quick, repeatable workflows.

Pricing and Performance

The new model is priced at $0.50 per 1 million input tokens and $3.00 per 1 million output tokens. That is more expensive than Gemini 2.5 Flash, which cost $0.30 per 1 million input tokens and $2.50 per 1 million output tokens. However, Google claims Gemini 3 Flash outperforms Gemini 2.5 Pro while being three times faster, and that for certain tasks it uses 30% fewer tokens on average than 2.5 Pro, potentially lowering overall costs.

“We really position Flash as more of your workhorse model,” said Tulsee Doshi, senior director and head of Product for Gemini Models, in a briefing with TechCrunch. “So if you look at, for example, even the input and output prices at the top of this table, Flash is just a much cheaper offering from an input and output price perspective. And so it actually allows for many companies to handle bulk tasks.”
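To make the price difference concrete, the Python sketch below applies the per-million-token list prices quoted above ($0.30/$2.50 for Gemini 2.5 Flash, $0.50/$3.00 for Gemini 3 Flash) to a workload. The model labels, the 10M-input/2M-output workload, and the assumption that any token savings apply equally to input and output tokens are illustrative choices, not Google figures. It also computes how large a token saving Gemini 3 Flash would hypothetically need on that workload to cost the same as its predecessor.

```python
"""Rough cost comparison using the per-token list prices quoted in the article."""

# USD per 1 million tokens, as quoted in the article. The dictionary keys are
# illustrative labels, not official API model IDs.
PRICES_PER_MILLION = {
    "gemini-2.5-flash": {"input": 0.30, "output": 2.50},
    "gemini-3-flash": {"input": 0.50, "output": 3.00},
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the list-price cost in USD of processing the given token counts."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def break_even_token_saving(input_tokens: int, output_tokens: int) -> float:
    """Fraction of tokens Gemini 3 Flash would need to save to cost the same
    as Gemini 2.5 Flash on this workload.

    Hypothetical assumption: the saving applies equally to input and output
    tokens, so cost scales linearly with (1 - saving).
    """
    old = workload_cost("gemini-2.5-flash", input_tokens, output_tokens)
    new = workload_cost("gemini-3-flash", input_tokens, output_tokens)
    return 1 - old / new


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    inp, out = 10_000_000, 2_000_000
    print(f"Gemini 2.5 Flash: ${workload_cost('gemini-2.5-flash', inp, out):.2f}")  # $8.00
    print(f"Gemini 3 Flash:   ${workload_cost('gemini-3-flash', inp, out):.2f}")    # $11.00
    print(f"Break-even token saving: {break_even_token_saving(inp, out):.1%}")      # 27.3%
```

Because the input price rose proportionally more than the output price (about 67% versus 20%), input-heavy workloads such as bulk data extraction see the larger increase, and the break-even saving rises accordingly.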

Competition with OpenAI

Since the release of Gemini 3, Google has been processing over 1 trillion tokens per day on its API, continuing its race with OpenAI. Earlier this month, Sam Altman, CEO of OpenAI, reportedly sent a “Code Red” memo to the OpenAI team after noticing a decline in ChatGPT’s traffic. At the same time, Google’s market share among consumers grew. In response, OpenAI launched GPT-5.2 and a new image generation model, and reported that ChatGPT messages have grown 8x since November 2024.

Though Google didn’t directly address its competition with OpenAI, it emphasized that new model releases are pushing every company in the space to stay active and competitive.

“Just about what’s happening across the industry is like all of these models are continuing to be awesome, challenge each other, push the frontier. And I think what’s also awesome is as companies are releasing these models,” Doshi said. “We’re also introducing new benchmarks and new ways of evaluating these models. And so that
