China’s Moonshot Releases Open-Source Multimodal Model Kimi K2.5

Moonshot AI has launched Kimi K2.5, an open-source multimodal AI model that handles text, images, video, and coding tasks and that the company says performs competitively with leading proprietary systems.

China-based Moonshot AI has released a new open-source model, Kimi K2.5, as part of its push into large-scale multimodal AI and developer tools.

Backed by major investors including Alibaba and HongShan (formerly Sequoia China), Moonshot said Kimi K2.5 is a natively multimodal model capable of understanding and reasoning across text, images, and video.

According to the company, Kimi K2.5 was trained on roughly 15 trillion mixed visual and text tokens. That training approach enables the model to handle a wide range of tasks, including complex coding workflows and multi-agent coordination, in which several AI agents collaborate to complete a task. Moonshot said benchmark results show Kimi K2.5 performing on par with, and in some cases surpassing, leading proprietary models.

In coding evaluations, the model outperformed Gemini 3 Pro on the SWE-Bench Verified benchmark and scored higher than GPT-5.2 and Gemini 3 Pro on the SWE-Bench Multilingual benchmark. For video understanding, Kimi K2.5 outperformed GPT-5.2 and Claude Opus 4.5 on VideoMMMU, a benchmark that measures how well models reason over video content.

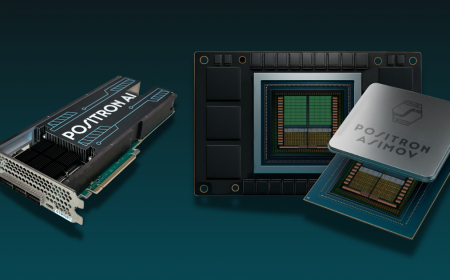

Image Credits: Moonshot AI

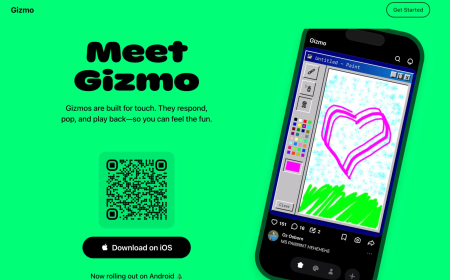

Moonshot said the model’s coding capabilities go beyond text-based prompts. Developers can provide images or videos and ask Kimi K2.5 to recreate or adapt interfaces shown in those media files, enabling more visual and contextual programming workflows.

Kimi.ai (@Kimi_Moonshot) on X, January 27, 2026: "Here's a short video from our founder, Zhilin Yang. (It's his first time speaking on camera like this, and he really wanted to share Kimi K2.5 with you!)" (video: pic.twitter.com/2uDSOjCjly)

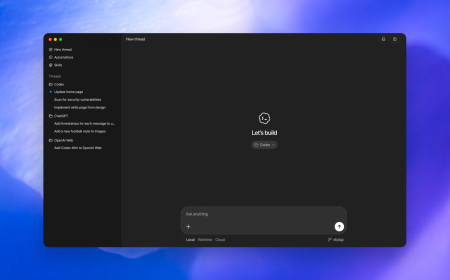

To make those capabilities more straightforward to use, Moonshot has also launched Kimi Code, an open-source coding agent designed to compete with tools like Anthropic’s Claude Code and Google’s Gemini CLI. Kimi Code can be run directly from the terminal or integrated into popular development environments such as VS Code, Cursor, and Zed. The company said developers can use text, images, and video as inputs when working with the tool.

Coding-focused AI tools have rapidly gained traction and are becoming major revenue drivers for AI labs. Anthropic said in November that Claude Code had reached $1 billion in annualised recurring revenue, and Wired reported earlier this month that the tool added another $100 million by the end of 2025. Meanwhile, Moonshot faces growing competition at home: Chinese rival DeepSeek is expected to release a new model with strong coding capabilities next month, according to The Information.

Moonshot AI was founded by Zhilin Yang, a former researcher at Google and Meta AI. The company raised $1 billion in a Series B round at a $2.5 billion valuation. Bloomberg reported that Moonshot secured an additional $500 million last month at a $4.3 billion valuation and is already exploring another funding round that could value the company at $5 billion.
