Google DeepMind opens access to Project Genie, its AI tool for building interactive game worlds

Google DeepMind is opening access to Project Genie, an experimental AI tool that creates interactive game worlds from text prompts or images, starting with U.S. subscribers.

Google DeepMind is opening broader access to Project Genie, its experimental AI system that can generate interactive game worlds from text prompts or images.

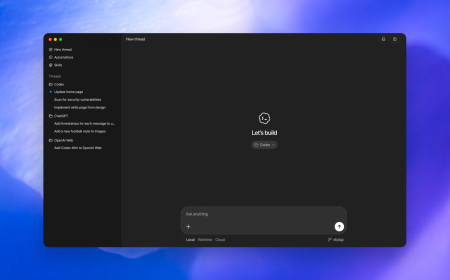

Beginning Thursday, Google AI Ultra subscribers in the U.S. will be able to try the research prototype, which is powered by a combination of DeepMind’s latest world model, Genie 3, its image-generation model Nano Banana Pro, and Gemini.

The release comes roughly five months after Genie 3’s initial research preview. It reflects DeepMind’s broader effort to collect user feedback and training data as it races to build more advanced world models.

World models are AI systems that create internal representations of environments, enabling them to predict future states and plan actions. Many AI leaders, including those at DeepMind, believe world models are a key step toward artificial general intelligence (AGI). In the near term, however, DeepMind sees commercial opportunities starting with video games and entertainment, eventually expanding into training embodied agents, such as robots, inside simulated environments.

DeepMind’s move arrives as competition in world models intensifies. Fei-Fei Li’s World Labs released its first commercial product, Marble, late last year. AI video startup Runway has also launched a world model, while former Meta chief scientist Yann LeCun’s startup AMI Labs is focusing heavily on the same area.

“I think it’s exciting to be in a place where we can have more people access it and give us feedback,” said Shlomi Fruchter, a research director at DeepMind, speaking to TechCrunch via video interview.

DeepMind researchers were clear that Project Genie remains highly experimental. In some cases, it produces impressive, playable environments; in others, it falls short of expectations.

To get started, users create a “world sketch” by entering text prompts that describe both the environment and a main character; the resulting world can later be navigated in either first- or third-person view. Nano Banana Pro generates an image from those prompts, which users can attempt to modify before Genie turns it into an interactive world. While edits often worked, the system occasionally made mistakes, such as unexpectedly changing a character's hair colour.

Users can also upload real-world photos as a base for world generation, with mixed results. Once the image is finalised, Project Genie takes a few seconds to generate an explorable environment. Users can remix existing worlds, browse curated creations in a gallery, or use a randomiser for inspiration. The system also allows users to download videos of the worlds they explore.

At launch, DeepMind is limiting each session to 60 seconds of world generation and navigation. The restriction is partly due to computational costs, since Genie 3 uses an auto-regressive architecture that requires dedicated hardware resources for each session.

“The reason we limit it to 60 seconds is because we wanted to bring it to more users,” Fruchter said. “When you’re using it, there’s a chip somewhere that’s only yours and it’s being dedicated to your session.”

He added that extending sessions beyond that limit would not significantly increase the value of early testing, given current interaction constraints.
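The link between an auto-regressive model and per-session hardware can be illustrated with a toy sketch. This is not DeepMind's implementation: `Session`, `next_frame`, `run_session`, and the default frame rate are all hypothetical stand-ins, and the "model" is a trivial arithmetic function. The only point it shows is that each new frame depends on the session's own history and the user's latest input, so that state has to stay resident somewhere for as long as the session runs, and a time cap bounds how long it is held.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical per-user state: the frame history each new frame depends on."""
    frames: list = field(default_factory=list)


def next_frame(history, action):
    # Stand-in for a model forward pass (assumption, not Genie 3's real logic):
    # the new frame is derived from the previous frame and the user's action.
    return history[-1] + action


def run_session(actions, fps=24, max_seconds=60):
    # max_seconds=60 mirrors the limit reported in the article;
    # fps=24 is an illustrative assumption, not a figure from the article.
    session = Session(frames=[0])  # frame 0 stands in for the world-sketch image
    budget = fps * max_seconds     # total frames allowed before the session ends
    for step, action in enumerate(actions):
        if step >= budget:
            break  # the cap frees the dedicated resources for another user
        session.frames.append(next_frame(session.frames, action))
    return session.frames


if __name__ == "__main__":
    # Ten user inputs, but a tiny budget of 2 fps * 3 s = 6 generated frames.
    print(run_session([1] * 10, fps=2, max_seconds=3))
```

Because every step reads the frames produced before it, two users cannot cheaply share one running rollout, which is consistent with Fruchter's description of a chip dedicated to each session.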

In practice, Project Genie shines most when generating whimsical or artistic environments. The system performed exceptionally well with styles such as clay animation, anime, watercolours, and classic cartoons. Attempts at photorealistic or cinematic scenes were less successful, often resembling video game graphics rather than lifelike environments.

When fed real photos, results varied. A photo of an office produced a loosely similar layout but felt sterile and digital. In another test, a stuffed toy on a desk was animated to navigate its surroundings, with nearby objects occasionally reacting as it moved.

Interactivity remains a work in progress. Characters sometimes passed through walls or solid objects, and movement controls, which rely on standard gaming keys such as W-A-S-D and the arrow keys, could be unresponsive or erratic. Navigating even simple spaces often became awkward and imprecise.

Still, the system showed signs of spatial memory. When revisiting previously generated areas, the environment usually remained consistent, though occasional discrepancies appeared.

Fruchter acknowledged these shortcomings, emphasising that Project Genie is not intended to be a polished, daily-use product. Instead, he described it as an early glimpse into what future world models could enable.

“We don’t think about this as an end-to-end product,” he said. “But there’s already something interesting and unique here that can’t really be done another way.”
