A new version of OpenAI’s Codex is powered by a new dedicated chip

OpenAI has introduced a new version of Codex powered by a dedicated chip, aiming to deliver faster, lower-latency responses for AI-powered coding tools.

On Thursday, OpenAI introduced a streamlined version of its agentic coding assistant Codex, expanding on the latest model unveiled earlier this month. The new release, GPT-5.3-Codex-Spark, is positioned as a lighter-weight counterpart to the existing 5.3 model, optimised for faster inference. To support those speed improvements, OpenAI is leveraging a dedicated chip from its hardware partner, Cerebras, signalling deeper integration between the two companies at the infrastructure level.

The collaboration between OpenAI and Cerebras was first revealed last month, when OpenAI disclosed a multi-year agreement valued at more than $10 billion. At the time, the company said that adding Cerebras to its compute portfolio was intended to significantly speed up response times for its AI systems. OpenAI now describes Spark as the “first milestone” in that broader partnership.

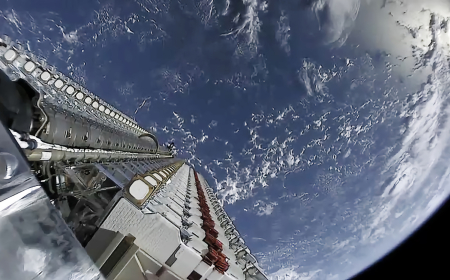

Codex-Spark is designed to enable rapid iteration and real-time collaboration. It runs on Cerebras’ Wafer Scale Engine 3, the company’s third-generation wafer-scale processor that integrates 4 trillion transistors into a single megachip. OpenAI characterises Spark as a tool intended for everyday productivity use cases, such as rapid prototyping and shorter coding tasks, rather than the more computationally intensive, extended workloads handled by the standard GPT-5.3 model. The lightweight version is currently available in a research preview to ChatGPT Pro subscribers through the Codex app.

Ahead of the official announcement, OpenAI CEO Sam Altman appeared to tease the launch on social media. “We have a special thing launching to Codex users on the Pro plan later today,” Altman wrote. “It sparks joy for me.”

In its formal release statement, OpenAI underscored Spark’s focus on minimising latency. “Codex-Spark is the first step toward a Codex that works in two complementary modes: real-time collaboration when you want rapid iteration, and long-running tasks when you need deeper reasoning and execution,” the company said. It added that Cerebras’ hardware is particularly well-suited to workflows requiring extremely low latency.

Cerebras, founded more than a decade ago, has gained increased visibility during the recent surge in AI development. Just last week, the company announced it had secured $1 billion in new funding at a $23 billion valuation. Cerebras has also previously signalled plans to pursue an initial public offering.

“What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible: new interaction patterns, new use cases, and a fundamentally different model experience,” said Sean Lie, CTO and co-founder of Cerebras, in a statement. “This preview is just the beginning.”
