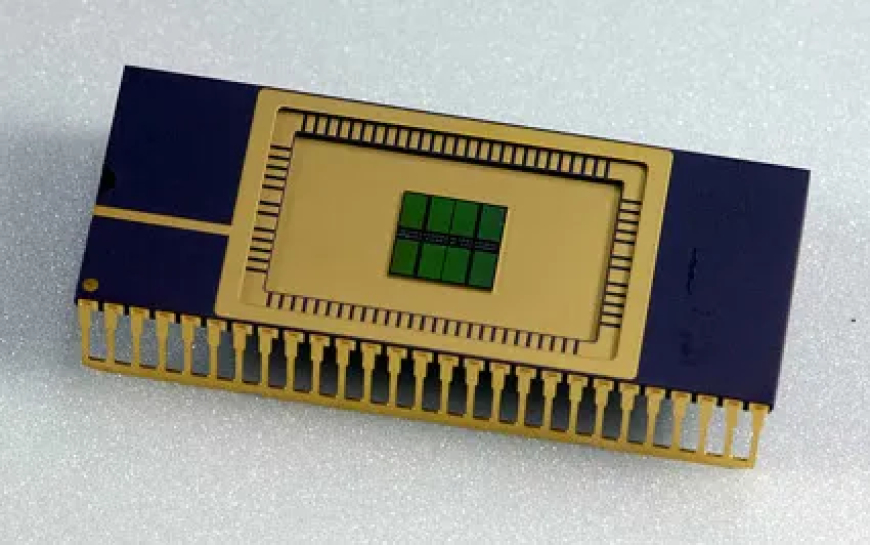

Running AI models is turning into a memory game

Running AI models increasingly depends on high-bandwidth memory, GPU capacity, and advanced chip design as developers race to overcome bottlenecks in large-scale AI workloads.

When people break down the cost of AI infrastructure, the spotlight usually lands on Nvidia and GPUs — but memory is quickly becoming just as central to the story. As hyperscalers prepare to pour billions of dollars into new data centre buildouts, the price of DRAM has reportedly surged by about 7x over the past year.

At the same time, a new discipline is taking shape around coordinating that memory so the right context reaches the right agent at exactly the right moment. The teams that get this right can run the same kinds of queries using fewer tokens, and that efficiency can be the line between scaling up and shutting down.

Semiconductor analyst Doug O’Laughlin has a thoughtful look at why memory chips matter on his Substack, where he speaks with Val Bercovici, chief AI officer at Weka. They both come from the semiconductor world, so the discussion leans heavily toward the hardware side — but the ripple effects for AI software are just as meaningful.

One section that stood out was Bercovici’s take on how much more complicated Anthropic’s prompt-caching guidance has become:

> The test is whether we go to Anthropic’s prompt caching pricing page. It started as a very simple page six or seven months ago, especially as Claude Code was launching: “use caching, it’s cheaper.” Now it’s an encyclopedia of advice on exactly how many cache writes to pre-buy. You’ve got 5-minute tiers, which are very common across the industry, or 1-hour tiers, and nothing above. That’s a really important tell. Then, of course, you’ve got all sorts of arbitrage opportunities around the pricing for cache reads based on how many cache writes you’ve pre-purchased.

What this really points to is how long Claude keeps your prompt in its cache: you can pay for a 5-minute window, or spend more for an hour-long one. Using information that’s still sitting in cache is far cheaper, so if you manage it well, the savings can be huge. But there’s a trade-off: every new piece of data you add to a query can push something else out of that cache window.
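To make the trade-off between the two windows concrete, here is a rough cost sketch in Python. Every number in it is an illustrative assumption rather than a figure from the interview: the base price of $3 per million input tokens, the write multipliers (1.25x for the 5-minute window, 2x for the 1-hour window), the 0.1x read multiplier, and the rule that each cache hit restarts the window. The function name `prefix_cost` and the request pattern are likewise hypothetical.

```python
def prefix_cost(prefix_tokens, gaps_minutes, ttl_minutes, write_multiplier,
                read_multiplier=0.10, base_per_mtok=3.00):
    """Estimate the cost of re-sending one shared prompt prefix.

    gaps_minutes holds the idle time before each follow-up request.
    All prices and multipliers are assumed values for illustration.
    """
    unit = base_per_mtok / 1_000_000             # dollars per token
    write = prefix_tokens * unit * write_multiplier
    read = prefix_tokens * unit * read_multiplier

    total = write                                # the first request always writes the cache
    for gap in gaps_minutes:
        # A hit within the window is billed as a cheap read (and is assumed
        # to restart the window); a miss pays for a fresh cache write.
        total += read if gap <= ttl_minutes else write
    return total


# Hypothetical workload: a 100,000-token prefix reused across six requests.
PREFIX_TOKENS = 100_000
GAPS = [2, 3, 20, 4, 30]                         # minutes between requests

cost_5m = prefix_cost(PREFIX_TOKENS, GAPS, ttl_minutes=5, write_multiplier=1.25)
cost_1h = prefix_cost(PREFIX_TOKENS, GAPS, ttl_minutes=60, write_multiplier=2.00)
cost_uncached = (len(GAPS) + 1) * PREFIX_TOKENS * 3.00 / 1_000_000

print(f"5-minute window: ${cost_5m:.3f}")
print(f"1-hour window:   ${cost_1h:.3f}")
print(f"no caching:      ${cost_uncached:.3f}")
```

Under these assumed numbers, the pricier 1-hour window ends up cheaper overall, because two of the gaps exceed five minutes and force the short window to pay for a fresh write each time. With tightly spaced requests the result flips, which is the kind of arbitrage the quote is pointing at.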

It’s technical and messy, but the takeaway is clear: memory management will be a major lever in the next phase of AI, and the companies that orchestrate memory well are likely to rise to the top.

There’s also plenty of room left to innovate across this emerging field. Back in October, I wrote about a startup called Tensormesh that’s working on one layer of the stack: cache optimisation.

And the opportunity isn’t limited to a single layer. Deeper down the stack, data centres are still figuring out how to use the different kinds of memory they already have. (The interview includes a strong discussion of when DRAM is used instead of HBM, though it gets pretty far into the hardware weeds.) Higher up the stack, end users are experimenting with how to organise model swarms to benefit from shared caching.

As memory orchestration improves, token usage will drop, and inference will get cheaper. At the same time, models keep becoming more efficient per token, pushing costs even lower. As server costs fall, a lot of applications that don’t look profitable today will start inching into viability — and eventually, into real margins.
